AI is showing up across nonprofit data systems, and many professionals are grappling with an important question: What can AI help us with, and what still relies on humans?

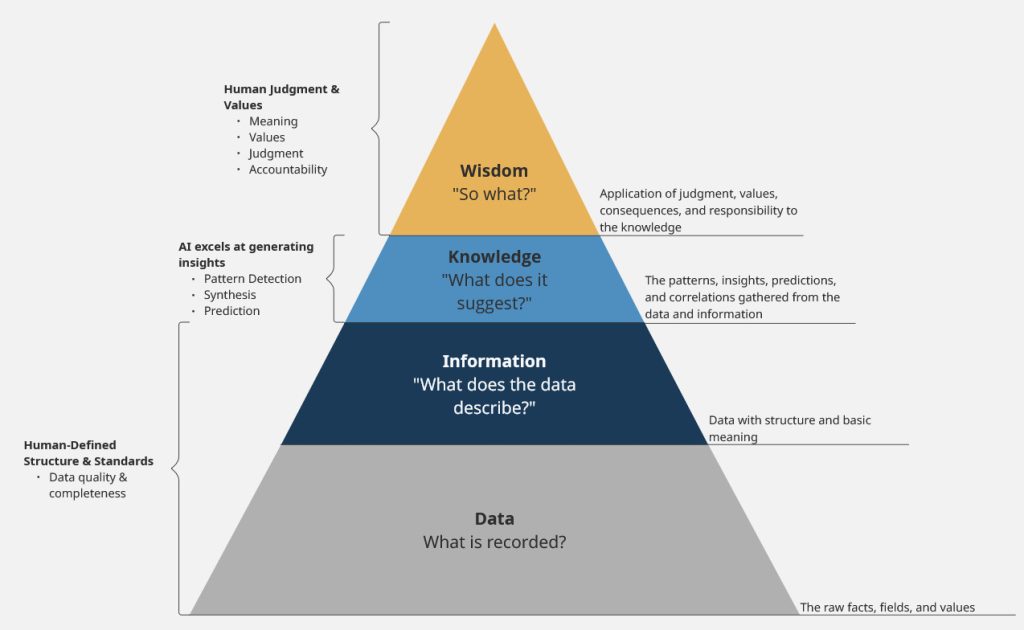

This is exactly what we should be asking as a sector, especially as AI becomes more embedded in the systems we rely on every day. The DIKW Pyramid, sometimes called the Knowledge Pyramid, offers a practical way to answer this question and understand where AI excels and where human responsibility remains essential.

If this is a new framework for you, no worries! DIKW stands for Data, Information, Knowledge, and Wisdom. It’s a model that helps us understand how raw data becomes insight and where judgment and responsibility come into play.

- Data – What is recorded?

  The raw facts, fields, and values

- Information – What does the data describe?

  Data with structure and basic meaning

- Knowledge – What does it suggest?

  The patterns, insights, predictions, and correlations gathered from the data and information

- Wisdom – So what?

  Application of judgment, values, consequences, and responsibility to the knowledge

Data & Information: The Foundations of Meaning

Let’s dig into an example to see what the different components look like.

Here are some data points: “Stephen”, “39”, “Toronto”, “Yorkshire Terrier”, [My annual salary]

On their own, these data don’t tell us anything. Each is just a string of letters or numbers. However, once you classify these data, you can start to derive meaning. “Toronto” becomes City = Toronto. Combine that with First Name = Stephen and Age = 39, and you can start to paint a picture of a specific person at a specific location and point in life.
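To make the step from data to information concrete, here is a minimal Python sketch. The field names (`first_name`, `age`, `city`, `dog_breed`) are assumptions chosen for illustration, not the schema of any particular CRM, and the salary value is left out because the example above doesn't state it.

```python
# Data: raw values with no labels attached.
raw_values = ["Stephen", "39", "Toronto", "Yorkshire Terrier"]

# Hypothetical field names, chosen for illustration only.
field_names = ["first_name", "age", "city", "dog_breed"]

# Information: the same values, now classified under named fields.
record = dict(zip(field_names, raw_values))

# Classification also means choosing a type: age is a number, not text.
record["age"] = int(record["age"])

print(record)
```

Nothing about the values changed. What changed is that a person decided which field each value belongs to and what type it should have.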

So far, there isn’t much here for AI to help with. Sure, AI tools may be able to assist with classification and summarization tasks. But ownership of data classification still lives with us, the people using the information system.

Knowledge: Where AI Shines

Knowledge is where AI starts to become useful. What does this information suggest?

- Stephen is likely an adult professional living in an urban environment

- Based on a number of factors, including his annual salary, his giving capacity is likely around $X per year

- His daily routines are likely shaped by being a dog owner (it’s true – it’s Lucy’s world, I just live in it!)

AI excels in this knowledge layer. It’s here that you can:

- Craft a compelling, personalized email that speaks directly to my experience

- Dynamically recommend a monthly gift amount that aligns with my likely giving capacity

- Predict how strongly connected I am to your cause to help prioritize outreach and engagement

Any LLM will generate an output in response to a vague prompt. That’s because these models are trained on tons of data and information. Those outputs can feel off, though, because they lack your data and context. When your own data and information are part of the input, AI-generated outputs start to resonate more. They reflect real experiences, relationships, and history. The quality of our data and information directly impacts the quality of the knowledge AI produces.

Wisdom: The “So What?” AI Can’t Answer

Which brings us to the final level: wisdom.

In other words, “So What?”

AI can’t replace human wisdom. Wisdom is about asking if we should act on this knowledge at all. What does this mean for the communities we serve? Who is accountable when something is wrong? (And AI does get some things wrong.)

This is where we apply our values, context, and responsibility.

What We Cannot Delegate to AI

So what does this mean in practice?

As AI becomes ingrained in our lives and throughout our organizations, we need to be aware of what AI can do well and what we cannot rely on it for. Let’s focus on the things we cannot delegate to AI:

- Data Quality & Completeness

  We own the quality and completeness of our data. If I’m in your CRM, but still listed as living in Montréal, shown as 33 instead of 39, or missing relevant notes about my interests, context, and engagement with your organization, AI will still generate summaries, detect patterns, and recommend actions, but these outputs will miss the mark. In other words, your knowledge will be shaky because the data and information inputs it’s based on aren’t right.

- The “So What?”

  We own the judgment. We’re the ones engaged with communities and partners. We understand context, equity, and impact. It’s on us to decide how knowledge is used, what responsible use looks like, and where accountability sits. We have agency and we need to use it.
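On the data quality point, one way a team might surface records that need attention before they feed an AI tool is a simple check like the sketch below. Everything here is an assumption for illustration: the field names, the `last_verified` date, the one-year threshold, and the sample record are hypothetical and do not come from a real CRM.

```python
from datetime import date


def find_quality_issues(record, today, max_age_days=365):
    """Return a list of plain-language issues found in one record."""
    issues = []

    # Completeness: flag fields that are absent or empty.
    for field in ("first_name", "city", "notes"):
        if not record.get(field):
            issues.append(f"missing {field}")

    # Freshness: flag records nobody has confirmed recently.
    last_verified = record.get("last_verified")
    if last_verified is None:
        issues.append("never verified")
    elif (today - last_verified).days > max_age_days:
        issues.append("stale record")

    return issues


# Hypothetical record: an old city, no notes, not verified in years.
crm_record = {
    "first_name": "Stephen",
    "city": "Montréal",
    "notes": "",
    "last_verified": date(2019, 5, 1),
}

issues = find_quality_issues(crm_record, today=date(2025, 1, 1))
print(issues)
```

A check like this can only flag that a record is incomplete or old. It cannot know that the city or age is wrong, and deciding what to do about a flagged record still sits with the people who own the data.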

Closing Reflection

AI doesn’t replace our responsibility for our data or how we use it. In fact, it makes that responsibility even more important.

As AI becomes more embedded in the systems nonprofits rely on every day, the organizations that benefit most will be the ones that invest in clear, consistent data practices, shared understanding, and thoughtful judgment.

AI is a powerful generator of knowledge. It’s still on us to ensure the foundations are strong and complete, and to decide what that knowledge means for our work and the communities we serve.